S2D Storage Tiers misconfiguration bug

I’ve been deploying a few Storage Spaces Direct (S2D) clusters lately, and I noticed a slight misconfiguration that can occur on deployment.

Normally when deploying S2D, the disk types in the nodes are detected and the fastest disk (usually NVMe or SSD) is assigned to the cache, the next fastest is used for the Performance Tier, and the slowest is used for the Capacity Tier. So if you have NVMe, SSD and HDD, you end up with an NVMe Cache, an SSD Performance Tier and an HDD Capacity Tier. With only two disk types (NVMe+SSD, NVMe+HDD or SSD+HDD), the fastest disk is used for Cache and the other is used for both the Performance and Capacity Tiers.

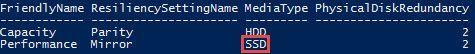

It seems there is a bug that causes the Performance Tier to set its MediaType to the fastest disk type in the system, even if those disks are already allocated to cache.

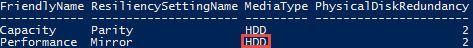

In my deployments, I’ve got 4x SSD and 12x HDD in each of my hosts, with the expectation that the SSDs are used for cache and the HDDs are mirrored for the Performance Tier and in parity for the Capacity Tier. However, even though the SSDs are being assigned correctly to the Cache, the Performance Tier shows an SSD MediaType, which prevents me from deploying volumes on mirrored HDD until this is corrected.

You can check your own systems by running the PowerShell command below:

Get-StorageTier | select FriendlyName, ResiliencySettingName, MediaType, PhysicalDiskRedundancy
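
To confirm that the SSDs really have been claimed for cache, it can also help to look at the physical disks themselves. This is a minimal sketch, assuming the standard Storage module cmdlets; on S2D, cache devices normally report a Usage of Journal, while capacity devices normally report Auto-Select.

```powershell
# Count the physical disks by media type and usage.
# Assumption: cache devices show Usage = Journal, capacity devices show Auto-Select.
Get-PhysicalDisk | Group-Object MediaType, Usage -NoElement | Sort-Object Name
```

If the SSDs show up as Journal but the Performance tier still reports SSD, you are most likely looking at the same problem.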

If you’ve got the same problem, it can be corrected very easily by changing the MediaType on the tier back to HDD:

Get-StorageTier -FriendlyName Performance | Set-StorageTier -MediaType HDD
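
If you would rather not change anything unless the tier is actually wrong, the same fix can be wrapped in a check. This is only a sketch: the tier name `Performance` and the expected media type `HDD` match my deployment and are assumptions for yours, and the `$tierName` and `$expected` variables are purely illustrative.

```powershell
# Assumptions: the tier is called "Performance" and the capacity drives are HDD.
# Adjust both values to match your own hardware.
$tierName = 'Performance'
$expected = 'HDD'

# Only touch tiers whose MediaType differs from what we expect.
Get-StorageTier -FriendlyName $tierName |
    Where-Object MediaType -ne $expected |
    Set-StorageTier -MediaType $expected

# Re-read the tiers to confirm the result.
Get-StorageTier | Select-Object FriendlyName, ResiliencySettingName, MediaType, PhysicalDiskRedundancy
```

Running it a second time should change nothing, since the filter no longer matches once the tier reports HDD.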

I’m sure a permanent fix for this will be along soon, but in the meantime I hope this helps others who come across the same problem.